Suburbia – the representation of the American dream – was once a place where people aspired to live. It represented security, stability and safety. Now suburbia is home to more than 16 million poor people.

Suburbia has the fastest-growing poor population. During the 2000s, the number of poor people living in suburbs increased 64 percent, and the poor population grew in 85 of the nation’s 95 largest metropolitan areas, according to The Brookings Institution.

More than 46 million people live in poverty in the U.S., according to the Census Bureau. With more than 16 million of them in suburbia, that is roughly a third of the total.

The popular understanding of poverty still conjures images of people struggling in urban or rural areas, and there is not enough news coverage to show the true face of the issue.

A number of factors have left the suburbs vulnerable, including increasing populations, lower-paying jobs and limited transit options. Although the federal government spent $82 billion a year across more than 80 programs to address poverty, most of this money went toward urban community development, according to The Brookings Institution. The issue in suburbs has not been adequately addressed.

Free or reduced-price school lunches

For our final class project, we decided to look at poverty through the lens of the school free or reduced-price lunch program. Food is all too often the first resource that people cut back on. Housing and utilities take priority, and food comes after.

Full-priced lunches cost $2.50 in Knox County elementary schools. A family of four must have an income of less than $43,568 to qualify for reduced-price meals or less than $30,615 for free meals, according to the U.S. Department of Agriculture. But USDA regulations prohibit schools from asking for proof of a parent’s income. To participate in the program, parents simply fill out a form stating their income at the beginning of the school year. Schools are required to verify a mere 3 percent of applicants. While fraud does occur, there is evidence in Knox County that the program is necessary.
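To make the cutoffs concrete, here is a minimal sketch of how the thresholds quoted above sort a family of four into a meal category. The function name `meal_category` and the constant names are our own illustration, not anything used by the USDA or Knox County Schools, and the strict "less than" comparison is an assumption based on the wording above.

```python
# Income thresholds for a family of four, as quoted in this article.
FREE_LIMIT = 30_615      # below this: free meals
REDUCED_LIMIT = 43_568   # below this: reduced-price meals


def meal_category(annual_income: float) -> str:
    """Return 'free', 'reduced' or 'full' for a family of four.

    Hypothetical helper for illustration only. It assumes a strict
    'less than' comparison against each threshold.
    """
    if annual_income < FREE_LIMIT:
        return "free"
    if annual_income < REDUCED_LIMIT:
        return "reduced"
    return "full"


# Three example household incomes, one per category.
for income in (25_000, 40_000, 60_000):
    print(income, meal_category(income))
```

Real eligibility also depends on household size, so this only covers the family-of-four case described here.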

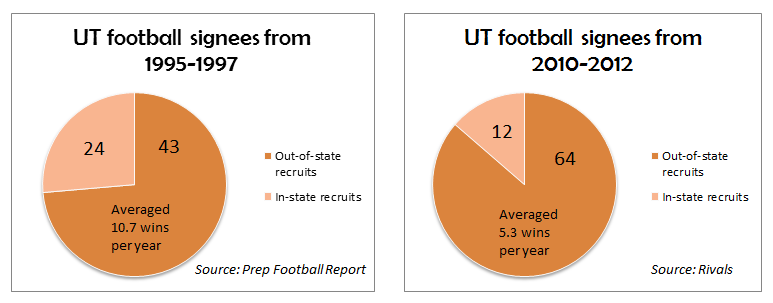

Source: Knox County Schools

In 2012, Green Magnet Academy and Lonsdale Elementary School recorded the highest shares of elementary-aged students enrolled in the program, 79 percent and 78 percent respectively. Both schools are located in areas with some of the lowest per capita income figures in Knox County, according to Census Reporter. A. L. Lotts Elementary School and Farragut Intermediate School recorded the lowest shares that same year, 7 percent and 10 percent respectively. Both schools are located in areas with higher per capita income figures. Enrollment at Corryton Elementary School and Sunnyview Primary School falls in the middle of the range, and both schools are located in areas with similarly middle-ground per capita income figures.

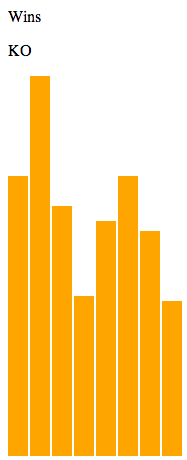

Source: Knox County Schools

While enrollment varies significantly on a school-to-school basis, fluctuations have been consistent throughout the past nine years. In 2009, Knox County Schools received $10.1 million in stimulus funds under Title I of the 2001 No Child Left Behind Act. Twenty-three Title I classified schools existed before 2009. An additional 11 schools with temporary classification and 13 schools with short-term classification received stimulus funding. Superintendent Jim McIntyre also reduced the threshold for funding from 66.7 percent of students enrolled in the free or reduced-price meal program to 40 percent. As a result, student enrollment sharply increased across all Knox County elementary schools in 2009.

In 2012, the cost of full-priced lunches in Knox County elementary schools increased from $2.25 to $2.50 under the Healthy, Hunger-Free Kids Act, which sought equity in pricing on a national level. This resulted in another sharp increase in enrollment that continues today. At the end of 2013, 49.7 percent of Knox County students qualified for free or reduced-price meals.

Rounding out this project

Difficult topics such as suburban poverty tend to seem abstract. Humanizing this subject was a hard task to manage.

Steele and I planned to interview principals of the elementary schools that we charted in color. However, we didn’t realize that the Tennessee Comprehensive Assessment Program testing would cause scheduling issues.